OpenCV 4.8.0 was released recently, and OpenVINO 2023.0.1 came out last week, so it’s a good time to see how they can be used together to run inference on an IR-optimised model. If you haven’t installed OpenVINO yet, you can learn how to do it here. If you haven’t installed OpenCV, you can follow this guide.

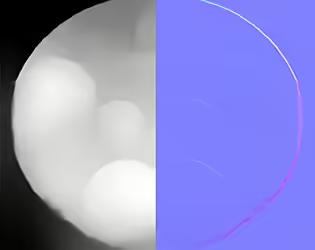

For this, I’m going to use a monocular depth estimation model, MiDaS. This model takes a color image as input and outputs an inverse depth estimate for every pixel. The closer an object is to the camera, the lighter the pixel, and vice versa. It looks like this:

Let’s grab the original ONNX model and convert it to the Intermediate Representation (IR) to be used with OpenVINO:

omz_downloader --name midasnet

omz_converter --name midasnet

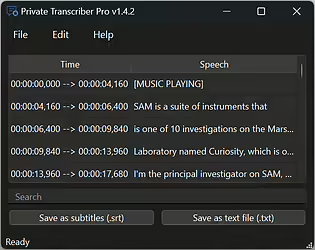

We can now use OpenVINO from inside OpenCV’s DNN module:

cv::dnn::Net net = cv::dnn::readNetFromModelOptimizer("../public/midasnet/FP32/midasnet.xml", "../public/midasnet/FP32/midasnet.bin");

net.setPreferableBackend(cv::dnn::Backend::DNN_BACKEND_INFERENCE_ENGINE);

Then we can proceed exactly as we normally would with the OpenCV DNN module:

cv::Mat blob = cv::dnn::blobFromImage(originalImage, 1., cv::Size(384, 384));

net.setInput(blob);

cv::Mat output = net.forward();
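The output here is a map of raw floating-point inverse depth values, not an image you can display directly. To get the "closer is lighter" picture described earlier, the values need to be rescaled to the 0..255 range. Below is a minimal sketch of that rescaling in plain C++, assuming the output has already been copied into a flat `std::vector<float>`. The `toGrayscale` helper is hypothetical and only illustrates the arithmetic; inside OpenCV, `cv::normalize` with `cv::NORM_MINMAX` performs the same kind of min-max scaling.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of OpenCV or OpenVINO): linearly rescales raw
// inverse-depth values to 0..255, so the largest value (the closest object)
// becomes the brightest pixel.
std::vector<std::uint8_t> toGrayscale(const std::vector<float>& inverseDepth) {
    std::vector<std::uint8_t> pixels(inverseDepth.size(), 0);
    if (inverseDepth.empty()) {
        return pixels;
    }

    // Find the smallest and largest inverse-depth values in the map.
    const auto minMax = std::minmax_element(inverseDepth.begin(), inverseDepth.end());
    const float minValue = *minMax.first;
    const float range = *minMax.second - minValue;
    if (range <= 0.0f) {
        return pixels;  // flat map: leave every pixel black
    }

    for (std::size_t i = 0; i < inverseDepth.size(); ++i) {
        const float scaled = (inverseDepth[i] - minValue) / range * 255.0f;
        pixels[i] = static_cast<std::uint8_t>(scaled + 0.5f);  // round to nearest
    }
    return pixels;
}
```

Calling `toGrayscale` on the values of `output` gives one 8-bit brightness per pixel, which can then be wrapped in a single-channel image and resized back to the original resolution for display.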

And that’s pretty much all you need to use OpenVINO from inside OpenCV’s DNN module. The code is almost the same as usual: you only need to change how the model is read, and set the back-end to the Inference Engine instead of the default OpenCV DNN one.
