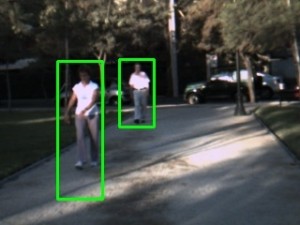

Human detection using stereo vision

Human detection is a key process when trying to achieve a fully automated social robot. Currently, most approaches to human detection by a social robot are based on visual features derived from human faces. These approaches have one major limitation: to be detected, the user must be facing the camera. This thesis presents a new approach based on novel features provided by an attention system that uses the entire human body. A human detection system was built to test the proposed features.

Depth map of the scene

This system is composed of four modules: (i) preprocessing, (ii) stereo-based segmentation, (iii) novel attention-based feature extraction, and (iv) neural network-based classification. The results show that the proposed system: (i) improves the detection rate and decreases the false positive rate compared to previous works based on different visual features; (ii) detects people in different poses, obtaining a considerably higher detection rate than the human detection modules commonly used in social robots, which often rely on face detection; (iii) runs in real time; and (iv) operates on board a mobile platform such as a social robot.
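To give a rough idea of what a stereo-based segmentation step can look like, here is a minimal sketch in plain Python. It is not the code from the thesis: it simply converts a disparity map to depth with the standard pinhole stereo relation Z = f * B / d and then groups neighbouring pixels that fall inside a depth range. The function names, the depth range, and the camera values (`focal_px`, `baseline_m`) are all made up for illustration.

```python
from collections import deque


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert disparities (pixels) to depth (metres) using Z = f * B / d.

    Pixels with no valid disparity (d <= 0) are marked as None.
    """
    return [
        [(focal_px * baseline_m / d) if d > 0 else None for d in row]
        for row in disparity
    ]


def segment_by_depth(depth, near_m, far_m):
    """Label 4-connected regions whose depth lies in [near_m, far_m].

    Returns (labels, count), where labels is a grid of integers
    (0 = background) and count is the number of regions found.
    """
    rows, cols = len(depth), len(depth[0])
    labels = [[0] * cols for _ in range(rows)]

    def in_range(z):
        return z is not None and near_m <= z <= far_m

    count = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] or not in_range(depth[r][c]):
                continue
            # Start a new region and flood-fill it breadth-first.
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (
                        0 <= ny < rows
                        and 0 <= nx < cols
                        and not labels[ny][nx]
                        and in_range(depth[ny][nx])
                    ):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# Toy example with hypothetical camera values (not from the thesis).
disparity = [
    [0, 40, 40, 0],
    [0, 40, 40, 0],
    [10, 0, 0, 40],
]
depth = disparity_to_depth(disparity, focal_px=400.0, baseline_m=0.1)
labels, count = segment_by_depth(depth, near_m=0.5, far_m=2.0)
```

In a real system each labelled region would be a candidate to pass on to feature extraction and classification. A library such as OpenCV would normally replace the hand-written flood fill here.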

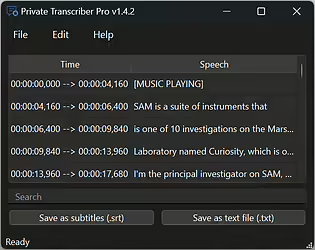

Here you can see a video about this research, which resulted in a paper published in IMAVIS, an ISI journal.

I am so interested in this work. Is the source code available?

Hello Do Nga,

The source code for the basis of the thesis, which is the saliency part, is available here:

http://www.samontab.com/web/saliency/

The full source code is not publicly available since it mostly deals with custom hardware and other internal stuff not of general interest.

The libraries used were OpenCV for image processing and FANN for the learning part. You can get them from their respective websites:

http://opencv.org/

http://leenissen.dk/fann/wp/

Hi Sam! I am wondering how it distinguishes between humans and other moving objects, e.g. animals?

Hello Ziga,

It detects humans because the system has been trained with examples of how humans look through the camera using a new feature based on saliency.

You can read the thesis for a more detailed explanation.

Does it also involve machine learning? I ask because you say that the machine has been trained with a few examples of how humans look through the camera.

Yes. I used a neural network to learn from hundreds of examples.