If you have a Wi-Fi capable Nokia Symbian phone with a memory card and a Wi-Fi router, you can use them to serve your music at home. This is a great way to keep all your music in one place, access it from any wireless-capable device (e.g. laptops), and share it with others in your house. You can still enjoy the same music on the go, since it is stored on your mobile phone. It also means you can turn an old, unused mobile phone into a very small and noiseless music server for free.

OK, the first step is to install PAMP, a web server stack for mobile phones. It bundles Apache, MySQL and PHP in a nice installable .sis package for your phone.

To install it, just go here and download the file named pamp_1_0_2.zip (not the SDK one).

Extract the files. Notice that there are three .sis files. First install pips_nokia_1_3_SS.sis, then install ssl.sis and finally install pamp_1_0_2.sis.

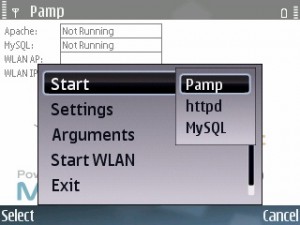

Now you should have a PAMP application on your phone. Open it. You should see something like this:

Now, click on Options and then select Start->Pamp. Answer Yes to the Start WLAN? question and select your home wireless network.

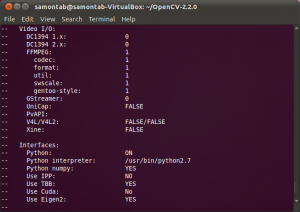

You should now see that the Apache and MySQL services are running, along with the name of your wireless network and the IP address assigned to the phone. That address is the one you need to connect to your phone. Write it down. It should look something like this:

Leave the PAMP application running in the background as is. You can do that by just pressing your Home button. Now let’s check that everything is working so far. On your laptop, open Firefox (or any other web browser) and type in the IP address from the previous step. You should see something like this:

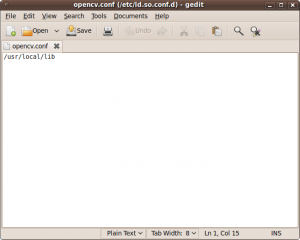
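If you prefer to check this from a script instead of the browser, here is a minimal Python sketch. The helper names `server_url` and `is_reachable` are made up for this example, and it assumes PAMP serves plain HTTP on the default port 80, which is what typing the bare IP address into the browser implies.

```python
from urllib.error import URLError
from urllib.parse import urlunsplit
from urllib.request import urlopen


def server_url(ip, path=""):
    """Build the http:// URL for a page served by the phone (hypothetical helper)."""
    return urlunsplit(("http", ip, "/" + path.lstrip("/"), "", ""))


def is_reachable(url, timeout=5):
    """Return True if the URL answers with HTTP 200 before the timeout expires."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (URLError, OSError):
        # Covers refused connections, timeouts and HTTP error responses.
        return False
```

For example, `is_reachable(server_url("192.168.0.100"))` should return `True` once Apache is running, where 192.168.0.100 is only a sample address; use the one PAMP shows on your phone.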

If you see something similar, the Apache server is working. Now follow the phpinfo.php link. It should display information about your mobile server (cool, isn’t it?) like this:

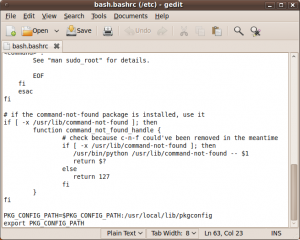

The web pages that you are looking at now are stored in the phone at E:/DATA/apache/htdocs. E: represents the memory card. This is the public folder that is being served by PAMP. The index.html file is the home page being displayed, and phpinfo.php is the link you just visited.

You may edit these web pages if you wish. You can install Y-Browser to browse your documents or create folders on your phone. You can also install ped to edit text files on the phone (this one requires Python for S60 to be installed first), or edit the files on your PC and then transfer them back to your phone.

The next step is to download whispercast, a lightweight PHP script for music streaming that is perfect for our needs (thanks to Manas Tungare for making this cool script!). On your Desktop, create a folder called music (it has to be exactly this name to work without configuring anything else). Extract all the files from the zip you just downloaded into this folder. Now add all the mp3 files that you want to the music folder as well. Each directory of mp3 files will be a play-list.
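Before copying anything to the phone, you may want to preview which play-lists the folder will produce. The following Python sketch is only an approximation: `list_playlists` is a name invented for this example, it looks one level deep only, and it does not reproduce how whispercast itself scans the folder.

```python
from pathlib import Path


def list_playlists(music_dir):
    """Map each sub-folder of music_dir to the sorted mp3 file names inside it."""
    playlists = {}
    for folder in sorted(Path(music_dir).iterdir()):
        if not folder.is_dir():
            continue  # skip the whispercast script files sitting next to the folders
        tracks = sorted(
            p.name
            for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() == ".mp3"
        )
        if tracks:  # folders without mp3 files are left out
            playlists[folder.name] = tracks
    return playlists
```

Calling `list_playlists("music")` from your Desktop returns a dictionary with one entry per folder, which makes it easy to spot an empty or misplaced directory before the transfer.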

Now you need to transfer the entire music folder (not just the contents, the folder itself too) into the phone, inside the E:/DATA/apache/htdocs folder.

It is now time to go to your laptop and type in the IP address followed by /music. For example, if your IP is 192.168.0.100, then you need to go to 192.168.0.100/music. You should now see the text Manas Tungare’s Music Library, with the list of your mp3 files. Navigate to the folder/play-list you want to hear and click on Start Playing. It will create a play-list on the fly and ask you to select the music player that you want to use. If in doubt, just select the default player. After that, you can save the play-list and double-click on it whenever you want to listen, or create a new one by visiting the same page.
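To give an idea of what such a play-list can look like, here is a small Python sketch that builds one by hand. It assumes your player accepts a plain M3U file made of HTTP URLs and that each track is reachable at /music/folder/file on the phone. The function name `m3u_playlist` is made up, and this is not necessarily the format whispercast generates.

```python
from urllib.parse import quote


def m3u_playlist(ip, folder, tracks):
    """Return M3U text with one URL per track under /music/<folder>/ (assumed layout)."""
    lines = ["#EXTM3U"]
    for track in tracks:
        # quote() escapes spaces and other characters that are not URL-safe.
        lines.append("http://{}/music/{}/{}".format(ip, quote(folder), quote(track)))
    return "\n".join(lines) + "\n"
```

Saving the returned text to a file ending in .m3u and opening it in your music player should start streaming from the phone, provided the assumptions above hold for your setup.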

If you need to tweak some parameters, just edit the config.php file. To change the layout or the text displayed, you can edit the other files.

That’s it. Now you can listen to your music from anywhere in your house with a small, noise-free music server. It should work with Linux, Windows, Mac, or even other mobile phones or tablets.